BLIP

Abstract

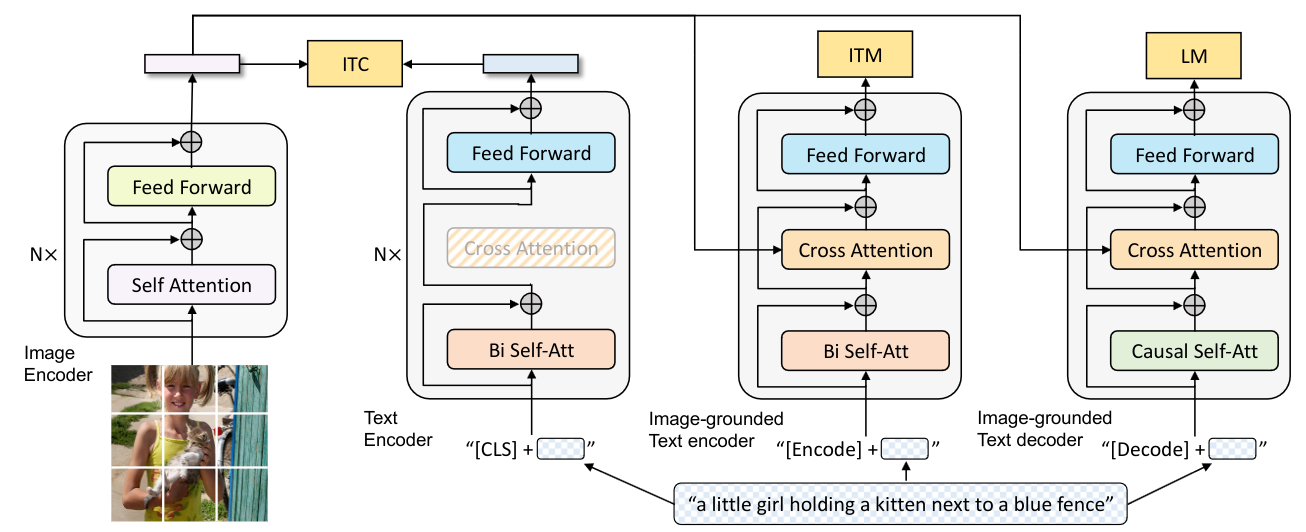

Vision-Language Pre-training (VLP) has advanced the performance for many vision-language tasks. However, most existing pre-trained models only excel in either understanding-based tasks or generation-based tasks. Furthermore, performance improvement has been largely achieved by scaling up the dataset with noisy image-text pairs collected from the web, which is a suboptimal source of supervision. In this paper, we propose BLIP, a new VLP framework which transfers flexibly to both vision-language understanding and generation tasks. BLIP effectively utilizes the noisy web data by bootstrapping the captions, where a captioner generates synthetic captions and a filter removes the noisy ones. We achieve state-of-the-art results on a wide range of vision-language tasks, such as image-text retrieval (+2.7% in average recall@1), image captioning (+2.8% in CIDEr), and VQA (+1.6% in VQA score). BLIP also demonstrates strong generalization ability when directly transferred to video-language tasks in a zero-shot manner.
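The caption bootstrapping step described above (which the paper calls CapFilt) can be read as a dataset-cleaning loop. The sketch below is illustrative only. `caption_fn` and `match_fn` are hypothetical stand-ins for BLIP's captioner and filter, not MMPretrain APIs. The fixed 0.5 match threshold and the toy functions in the usage lines are also assumptions.

```python
from typing import Callable, Iterable, List, Tuple

Pair = Tuple[str, str]  # (image_id, text)


def capfilt(
    web_pairs: Iterable[Pair],
    human_pairs: Iterable[Pair],
    caption_fn: Callable[[str], str],          # hypothetical captioner: image -> synthetic caption
    match_fn: Callable[[str, str], float],     # hypothetical filter: (image, text) -> match score
    threshold: float = 0.5,                    # assumed decision threshold
) -> List[Pair]:
    """Illustrative CapFilt-style bootstrapping of noisy web image-text pairs."""
    cleaned: List[Pair] = list(human_pairs)    # human-annotated pairs are kept unchanged
    for image_id, web_text in web_pairs:
        synthetic = caption_fn(image_id)
        # Keep the web text and the synthetic caption only if the filter accepts them.
        for text in (web_text, synthetic):
            if match_fn(image_id, text) >= threshold:
                cleaned.append((image_id, text))
    return cleaned


# Toy usage: the "filter" accepts a text if it mentions the image id.
def toy_caption(image_id: str) -> str:
    return f"a photo of {image_id}"


def toy_match(image_id: str, text: str) -> float:
    return 1.0 if image_id in text else 0.0


web = [("cat", "buy cheap shoes"), ("dog", "my dog")]
human = [("bird", "a bird")]
print(capfilt(web, human, toy_caption, toy_match))
```

The noisy web text for `cat` is dropped while its synthetic caption is kept, which is the behaviour the abstract describes at a high level.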

How to use it?

```python
from mmpretrain import inference_model

result = inference_model('blip-base_3rdparty_caption', 'demo/cat-dog.png')
print(result)
# {'pred_caption': 'a puppy and a cat sitting on a blanket'}
```

Prepare your dataset according to the docs.

Test:

```shell
python tools/test.py configs/blip/blip-base_8xb32_caption.py https://download.openmmlab.com/mmclassification/v1/blip/blip-base_3rdparty_coco-caption_20230419-a5b71af3.pth
```

Models and results

Image Caption on COCO

| Model | Params (M) | BLEU-4 | CIDEr | Config | Download |
|---|---|---|---|---|---|
| | 223.97 | 40.12 | 132.82 | | |
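BLEU-4 is the geometric mean of clipped 1- to 4-gram precisions, multiplied by a brevity penalty. Below is a minimal pure-Python sketch of corpus-level BLEU-4 against multiple references. It assumes whitespace tokenization and a closest-reference-length brevity penalty, and applies no smoothing. Its scores will therefore not exactly match the COCO caption evaluation toolkit typically used for tables like the one above. It returns values on a 0 to 1 scale, while the table reports them scaled by 100.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    """Count the n-grams (as tuples) in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu4(candidates: List[List[str]], references: List[List[List[str]]]) -> float:
    """Corpus BLEU-4: candidates[i] is a tokenized caption, references[i] its tokenized references."""
    matched = [0] * 4  # clipped n-gram matches for n = 1..4
    total = [0] * 4    # candidate n-gram counts for n = 1..4
    cand_len = ref_len = 0
    for cand, refs in zip(candidates, references):
        cand_len += len(cand)
        # Effective reference length: closest to the candidate length (ties go to the shorter).
        ref_len += min((abs(len(r) - len(cand)), len(r)) for r in refs)[1]
        for n in range(1, 5):
            max_ref: Counter = Counter()
            for r in refs:
                max_ref |= ngram_counts(r, n)  # per-n-gram maximum count over references
            cand_counts = ngram_counts(cand, n)
            matched[n - 1] += sum(min(c, max_ref[g]) for g, c in cand_counts.items())
            total[n - 1] += sum(cand_counts.values())
    if min(matched) == 0:
        return 0.0  # no smoothing: an n-gram order with zero matches gives BLEU-4 = 0
    log_precision = sum(math.log(m / t) for m, t in zip(matched, total)) / 4
    brevity_penalty = 1.0 if cand_len > ref_len else math.exp(1 - ref_len / cand_len)
    return brevity_penalty * math.exp(log_precision)


ref = "a cat sitting on a blanket".split()
print(corpus_bleu4([ref], [[ref]]))                       # 1.0
print(corpus_bleu4(["a dog on grass".split()], [[ref]]))  # 0.0
```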

Image Caption on NoCaps

| Model | Params (M) | SPICE | CIDEr | Config | Download |
|---|---|---|---|---|---|
| | 223.97 | 14.69 | 109.12 | | |

Image Caption on Flickr30k

| Model | Params (M) | SPICE | CIDEr | Config | Download |
|---|---|---|---|---|---|
| | 223.97 | 15.58 | 68.89 | | |

Visual Grounding on RefCOCO

| Model | Params (M) | Accuracy (testA) | Accuracy (testB) | Config | Download |
|---|---|---|---|---|---|
| | 498.49 | 86.14 | 77.33 | | |
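RefCOCO grounding accuracy is conventionally the percentage of referring expressions whose predicted box overlaps the ground-truth box with an IoU of at least 0.5. The sketch below computes that metric from `(x1, y1, x2, y2)` boxes. The box format and the inclusive `>= 0.5` comparison are assumptions. This is not MMPretrain's evaluator.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(ix2 - ix1, 0.0) * max(iy2 - iy1, 0.0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(preds: List[Box], gts: List[Box], thr: float = 0.5) -> float:
    """Percentage of predictions whose IoU with the ground truth is at least `thr`."""
    hits = sum(iou(p, g) >= thr for p, g in zip(preds, gts))
    return 100.0 * hits / len(gts)


preds = [(0, 0, 10, 10), (0, 0, 2, 2)]
gts = [(0, 0, 10, 10), (5, 5, 8, 8)]
print(grounding_accuracy(preds, gts))  # 50.0
```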

Visual Question Answering on VQAv2

| Model | Params (M) | Accuracy | Config | Download |
|---|---|---|---|---|
| | 361.48 | 78.20 | | |
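VQAv2 accuracy gives partial credit based on how many of the human annotators gave the predicted answer. The sketch below follows the commonly used leave-one-annotator-out averaging of `min(matches / 3, 1)`. As an assumption, it replaces the official answer normalization (punctuation, articles, number words) with simple lowercasing. It returns a 0 to 1 score per question, while the table reports the mean scaled by 100.

```python
from typing import List


def normalize(answer: str) -> str:
    # Assumption: simplified normalization, not the official VQA answer processing.
    return answer.strip().lower()


def vqa_accuracy(pred: str, human_answers: List[str]) -> float:
    """Per-question VQA accuracy, averaged over leave-one-annotator-out subsets."""
    pred = normalize(pred)
    answers = [normalize(a) for a in human_answers]
    scores = []
    for i in range(len(answers)):
        others = answers[:i] + answers[i + 1:]  # drop annotator i
        matches = sum(a == pred for a in others)
        scores.append(min(matches / 3.0, 1.0))
    return sum(scores) / len(scores)


answers = ["yes"] * 3 + ["no"] * 7
print(round(vqa_accuracy("yes", answers), 4))  # 0.9
```

With three "yes" votes out of ten, the prediction earns partial credit because some leave-one-out subsets contain only two matching answers.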

Visual Question Answering on OK-VQA

| Model | Params (M) | Accuracy | Config | Download |
|---|---|---|---|---|
| | 361.48 | 40.59# | | |

Visual Question Answering on OCR-VQA

| Model | Params (M) | Accuracy | Config | Download |
|---|---|---|---|---|
| | 361.48 | 28.30# | | |

Image-To-Text Retrieval on COCO

| Model | Params (M) | Recall@1 | Recall@5 | Config | Download |
|---|---|---|---|---|---|
| | 447.49 | 82.52 | 95.34 | | |

Text-To-Image Retrieval on COCO

| Model | Params (M) | Recall@1 | Recall@5 | Config | Download |
|---|---|---|---|---|---|
| | 447.49 | 64.82 | 86.28 | | |
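Recall@K is the percentage of queries for which at least one correct match appears among the K highest-scoring candidates. The sketch below assumes a precomputed query-by-candidate similarity matrix. It only shows how the metric is computed from scores, not how BLIP produces them. Because each COCO image has several reference captions, an image-to-text query can have more than one positive. The toy matrix and caption-to-image mapping are made up for illustration.

```python
from typing import List, Sequence, Set


def recall_at_k(scores: Sequence[Sequence[float]], positives: List[Set[int]], k: int) -> float:
    """Percentage of queries with at least one positive candidate in their top-k.

    scores[q][c]: similarity between query q and candidate c.
    positives[q]: indices of the candidates that correctly match query q.
    """
    hits = 0
    for row, pos in zip(scores, positives):
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += any(c in pos for c in top_k)
    return 100.0 * hits / len(positives)


# Toy setup: 2 images x 4 captions, captions 0-1 describe image 0, captions 2-3 image 1.
sim = [[0.9, 0.1, 0.8, 0.2],
       [0.3, 0.7, 0.6, 0.5]]
i2t_pos = [{0, 1}, {2, 3}]
t2i_scores = [list(col) for col in zip(*sim)]  # transpose: captions become the queries
t2i_pos = [{c // 2} for c in range(4)]

print(recall_at_k(sim, i2t_pos, 1))         # 50.0
print(recall_at_k(sim, i2t_pos, 2))         # 100.0
print(recall_at_k(t2i_scores, t2i_pos, 1))  # 50.0
```

The same function serves both directions; text-to-image retrieval simply transposes the similarity matrix.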

Image-To-Text Retrieval on Flickr30k

| Model | Params (M) | Recall@1 | Recall@5 | Config | Download |
|---|---|---|---|---|---|
| | 447.49 | 95.10# | 99.60# | | |

Text-To-Image Retrieval on Flickr30k

| Model | Params (M) | Recall@1 | Recall@5 | Config | Download |
|---|---|---|---|---|---|
| | 447.49 | 85.26# | 96.58# | | |

NLVR on NLVR2

| Model | Params (M) | Top-1 (%) | Config | Download |
|---|---|---|---|---|
| | 259.37 | 82.33 | | |

Models marked with * are converted from the official repo. Their config files are for inference only; we have not reproduced the training results.

Results marked with # are zero-shot evaluations: the corresponding model has not been fine-tuned on that dataset.

Citation

```bibtex
@inproceedings{li2022blip,
  title={BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation},
  author={Junnan Li and Dongxu Li and Caiming Xiong and Steven Hoi},
  year={2022},
  booktitle={ICML},
}
```